Have you ever scrolled through Instagram, come across something more revealing than you expected, and wondered, ‘Does Instagram allow nudes?’ According to the platform’s policy, the answer is a categorical no. So why do glimpses of skin still manage to slip through the cracks? Fasten your seat belts, because it is time to wade into the wild, weird, and wondrous world of Instagram’s lingering nudity problem and how it enforces its rules.

Does Instagram allow nudes?

No. Instagram does not allow nudes on its platform.

This policy is in place to protect its users, especially children, from exposure to inappropriate content.

Here is a summary of what is and isn’t allowed on Instagram when it comes to nudity:

Prohibited content:

- Full nudity: Images and videos may not show genitals, fully nude buttocks, or female nipples.

- Sexual activity: Depictions of sexual intercourse are strictly forbidden, even if genitals are not visible on camera.

- Sexually suggestive content: Sexually suggestive material or poses are prohibited, even if the person is not completely naked.

- Child nudity: Any sexually explicit content or nudity involving minors is prohibited and can be reported to the authorities.

Allowed exceptions:

There are, however, several exceptions to this general rule. These include:

- Breastfeeding photos: Images of mothers breastfeeding their children.

- Post-mastectomy scarring: Photos showing post-mastectomy scars.

- Health-related images: Images taken after surgery or in other health-related contexts.

- Artistic representations: Sculptures, paintings, and other artworks that depict nudity.

Instagram’s strict policy on nudity helps maintain a safe environment for all users. For the latest details, read Instagram’s Community Guidelines.


Does Instagram delete inappropriate photos?

Yes. Instagram actively removes obscene images, such as those that violate its no-nudity policy.

To identify cases of nudity, Instagram relies on the following approaches:

- User reporting system: Because Instagram cannot manually review everything that is uploaded, it relies on users to report obscene content.

- AI and machine learning: Automated systems scan photos for obscenity and other forms of nudity.

Reported content is reviewed by human moderators, who make the final call on ambiguous cases.

When a photo is identified as inappropriate, the platform enforces its policies in the following ways:

- Content removal: Instagram removes the content from the app, either through automated analysis or after a user report.

- Account warnings: Instagram may send warnings to users whose content violates its policies and guidelines.

- Account suspension or deletion: Repeated violations can lead to a temporary suspension or permanent deletion of the account.

- Algorithmic suppression: Instagram reduces the visibility of borderline content in Feed and on the Explore page.

Although Instagram aims for consistent enforcement, it struggles to keep pace with the enormous volume of content shared every day.

As a result, some obscene pictures occasionally slip through, while innocent posts are sometimes removed.

Instagram constantly refines its processes to make content moderation more efficient and reliable.

What are Instagram’s community guidelines?

As mentioned, Instagram does not allow nudes. Its rules of conduct are also surprisingly numerous and comprehensive, all aimed at creating a healthy environment for everyone involved.

Here’s a breakdown focusing on nudity and inappropriate content:

1. Nudity: Instagram prohibits nudity. This includes photographs and videos depicting genitals, buttocks, or female nipples. Paintings and sculptures that depict nudity are allowed as a form of art.

2. Sexually suggestive content: Content that is sexually suggestive or implies nudity is also prohibited. This can include pictures of people dressed revealingly or posing provocatively.

3. Inappropriate content: Beyond nudity, Instagram also restricts material that could harm or disturb users. This covers a broad range of topics, including:

- Violence and hate speech

- Self-harm and suicide

- Bullying and harassment

- Contraband and restricted goods

- Spam and misleading content

Through these guidelines, Instagram aims to let people share their imagination and creativity while keeping offensive content off the platform.

How does Instagram detect nudity?

To enforce its ban on nudes, Instagram uses a two-pronged approach that combines automated detection with user reports.

Automated detection:

Instagram uses machine learning models trained on large datasets to detect nudity in uploaded images.

These algorithms consider characteristics such as skin tone, shapes, and context to identify potentially obscene material.

The system keeps improving, but it is not infallible. Partially obscured bodies or artistic overlays, for example, can sometimes slip past automated detection.

User reporting:

Instagram lets users actively help keep the platform safe. If a photo violates the app’s rules, users can report it directly within the app.

This alerts a team of moderators, who evaluate the image and act accordingly.

Actions can include removing the picture, warning the user, or, for repeat violations, deactivating the account.

However, this dual system has its limitations:

1. False positives: Machine learning algorithms are not perfect, and they occasionally flag harmless posts as violations. This can frustrate users and shows the need to balance automation with adequate oversight by human reviewers.

2. Subjectivity: One person might find nudity in art harmless, while another might find it inappropriate. This subjectivity makes it hard to set a consistent standard for automated detection and underlines the importance of human reviewers who make the final decision.

How to report inappropriate content on Instagram?

Instagram empowers users to play an active role in maintaining a safe and positive online environment.

If you encounter content that violates its Community Guidelines, including nudity or other inappropriate material, you can easily report it within the app.

1. Locate the offending content: Whether it’s a post, story, or comment, find the content you wish to report.

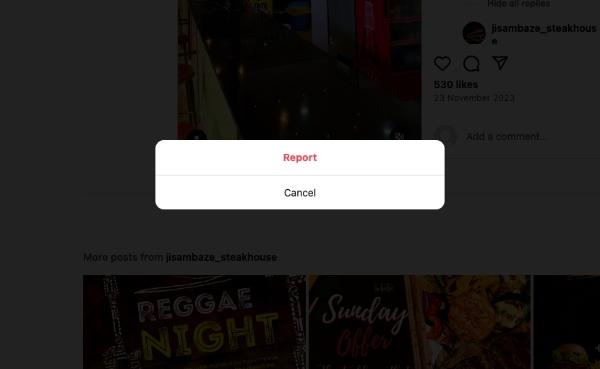

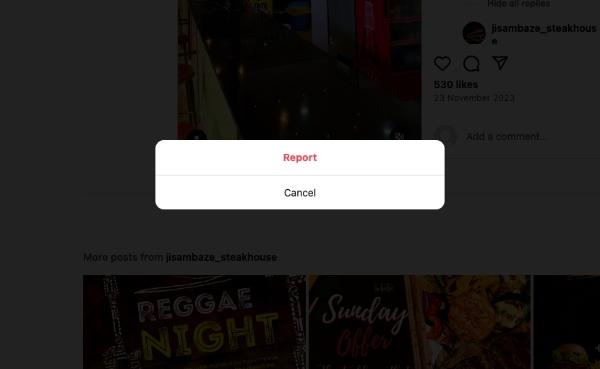

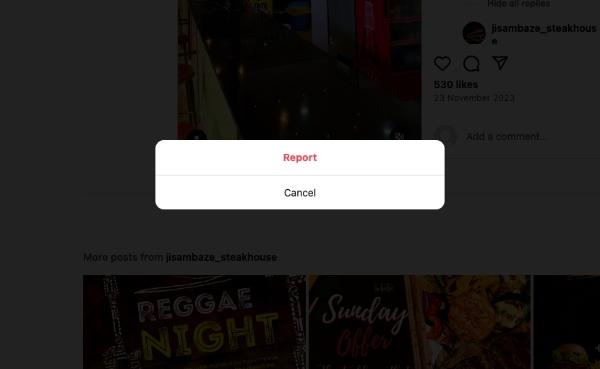

2. Tap the three dots (…): This menu button is usually located in the top right corner of the post or comment.

3. Select “Report.” A list of reporting options will appear.

4. Choose the most relevant reason: Instagram provides various reporting options like “Nudity and sexual activity,” “Hate speech or bullying,” or “Self-harm.” Select the category that best describes the violation.

5. Provide additional details: You can add more specific details about the content you are reporting, which helps reviewers better understand the situation.

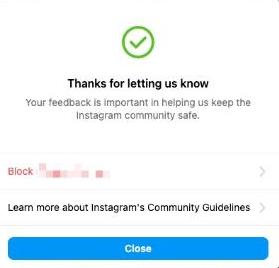

6. Submit the report: Once you’ve selected your reason and provided any additional information, confirm your report submission.

By following these steps and actively reporting inappropriate content, you contribute to a more positive and secure Instagram experience for yourself and the entire community.

How to monitor your kid’s Instagram?

A parental control app can also help you monitor your child’s general activity on Instagram and filter out obscene content. FlashGet Kids is one such app, designed to help you manage your child’s device usage and online safety. While FlashGet Kids doesn’t directly access message content, it can help you monitor inappropriate content in a few ways:

- App usage tracking: FlashGet Kids lets you see how much time your child spends in a specific app, including Instagram. This gives you an idea of their overall Instagram use and whether their screen time is becoming excessive.

- Notifications: You can set alerts for when your child has spent a specific amount of time on Instagram or is using the app after certain hours.

- App blocker: With FlashGet Kids, you can block individual apps, including apps known for hosting explicit content. This indirectly limits your child’s exposure to potentially dangerous areas of the internet.

- Content monitoring: FlashGet Kids lets you monitor the content your child browses on Instagram by setting up keyword detection.

- Usage reports: FlashGet Kids provides reports on your child’s app usage. They do not show detailed activity within Instagram, but they can reveal broader usage patterns.

How to use FlashGet Kids?

- Download and install FlashGet Kids on the parent’s device.

- Install FlashGet Kids for child on your child’s device.

- Create an account and bind your child’s device.

- Grant the necessary permissions and configure the settings.

- Tap the relevant features on the dashboard to use notifications, the app blocker, and content monitoring.

By combining FlashGet Kids with Instagram’s own parental controls, you create a multi-layered approach to keeping your child safe online.

Conclusion

Instagram has made it clear that it does not allow nudes on its platform, but it still struggles to enforce this policy consistently. The sheer volume of user-generated content shows how hard it is to keep a platform family-friendly in the internet age. Although Instagram uses sophisticated algorithms and community reporting to fight nudity, tools such as FlashGet Kids can add another layer of protection for your child. While the platform is responsible for maintaining and enforcing its Community Guidelines, users also share responsibility for creating a healthy and courteous environment for everyone.

FAQs

What happens if you violate Instagram community guidelines once?

If you violate Instagram’s Community Guidelines for the first time, you may receive a warning. The offending content will be removed, and you will be told which rule it broke. Further violations can result in a temporary suspension or a permanent ban.

Does Instagram censor stories?

Yes, Instagram does censor stories. It uses machine learning to flag content that violates its guidelines and then removes it. Users can also report stories they find inappropriate, and those stories are then reviewed by moderators.

Why are some words censored on Instagram?

Instagram filters some words to protect users and uphold decency. These include obscene language, hateful slurs, and terms linked to criminal activity. The platform relies on automatic filters and user reports to determine which words might be hurtful.

Can you put content restrictions on Instagram?

Yes, you can limit the content you see on Instagram. In your account settings, the ‘Sensitive Content Control’ option lets you reduce how much potentially upsetting content appears in your feed.